Generic Machine Learning Orchestration Framework by Xeptagon

• Published 10:00 AM EDT, Mon Aug 22, 2022

In the current software industry, machine learning models appear in many use cases. Data scientists, data engineers, and software engineers from a variety of technical backgrounds implement these models with different technologies such as TensorFlow, PyTorch, Python, and R. Most developers start by building a prototype in a notebook and then refine the model based on the results they obtain.

However, deploying these models to production brings multiple challenges, such as scalability, availability, fault tolerance, model management, and reusability. For data scientists and data engineers without prior experience in software architecture and DevOps technologies, these challenges are even greater and more time-consuming to address. Cloud providers have recognized this problem and offer services such as AWS SageMaker, Azure Synapse Analytics, and Google Cloud Datalab. However, these solutions still have many limitations, in addition to locking users in to a single cloud vendor.

Considering these limitations, Xeptagon designed and developed a cloud-platform-independent, generic machine learning orchestration framework. Our framework is based on Python, FastAPI, Docker, Terraform, GitHub Actions, and Kubernetes, and was developed to deploy the machine learning models of an intergovernmental organization.

To start, we defined a generic interface for the machine learning models. Every model implements this interface so that the model management service can manage all models in a uniform way. Model developers can also add extra endpoints specific to their model. As a result, the system exposes a generic management Swagger API alongside an independent Swagger API for each model. Models can be configured to run either as an independent service or in a shared service alongside other models in the cluster. The model management service performs global updates and queries on the models through the interface endpoints.
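The post does not publish the interface itself. As a minimal sketch, assuming an in-process Python abstract base class in place of the HTTP endpoints the framework exposes through FastAPI, the generic contract and a management service that relies only on it could look like this. The names `ModelInterface`, `MeanModel`, `ManagementService`, and the `health`/`info`/`predict`/`reload` methods are hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict


class ModelInterface(ABC):
    """Hypothetical generic contract that every model implements."""

    name: str
    version: str

    @abstractmethod
    def health(self) -> Dict[str, Any]:
        """Report liveness/readiness to the management service."""

    @abstractmethod
    def info(self) -> Dict[str, Any]:
        """Return metadata such as name and version."""

    @abstractmethod
    def predict(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        """Run inference on a request payload."""

    @abstractmethod
    def reload(self) -> None:
        """Reload model artifacts, e.g. after a global update."""


class MeanModel(ModelInterface):
    """Hypothetical toy model: predicts the mean of the input values."""

    def __init__(self) -> None:
        self.name = "mean-model"
        self.version = "1.0.0"
        self.reloads = 0

    def health(self) -> Dict[str, Any]:
        return {"status": "ok"}

    def info(self) -> Dict[str, Any]:
        return {"name": self.name, "version": self.version}

    def predict(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        values = payload["values"]
        return {"prediction": sum(values) / len(values)}

    def reload(self) -> None:
        self.reloads += 1


class ManagementService:
    """Hypothetical manager that acts on models only through the interface."""

    def __init__(self) -> None:
        self._models: Dict[str, ModelInterface] = {}

    def register(self, model: ModelInterface) -> None:
        self._models[model.name] = model

    def query_all(self) -> Dict[str, Dict[str, Any]]:
        # Global query: the same calls work for every model type.
        return {n: {**m.info(), **m.health()} for n, m in self._models.items()}

    def update_all(self) -> None:
        # Global update: reload every registered model.
        for model in self._models.values():
            model.reload()


service = ManagementService()
service.register(MeanModel())
service.update_all()
print(service.query_all())
# {'mean-model': {'name': 'mean-model', 'version': '1.0.0', 'status': 'ok'}}
```

Because `ManagementService` only calls interface methods, adding a new model type requires no changes to the manager; model-specific endpoints would live alongside the interface methods in each model's own service.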

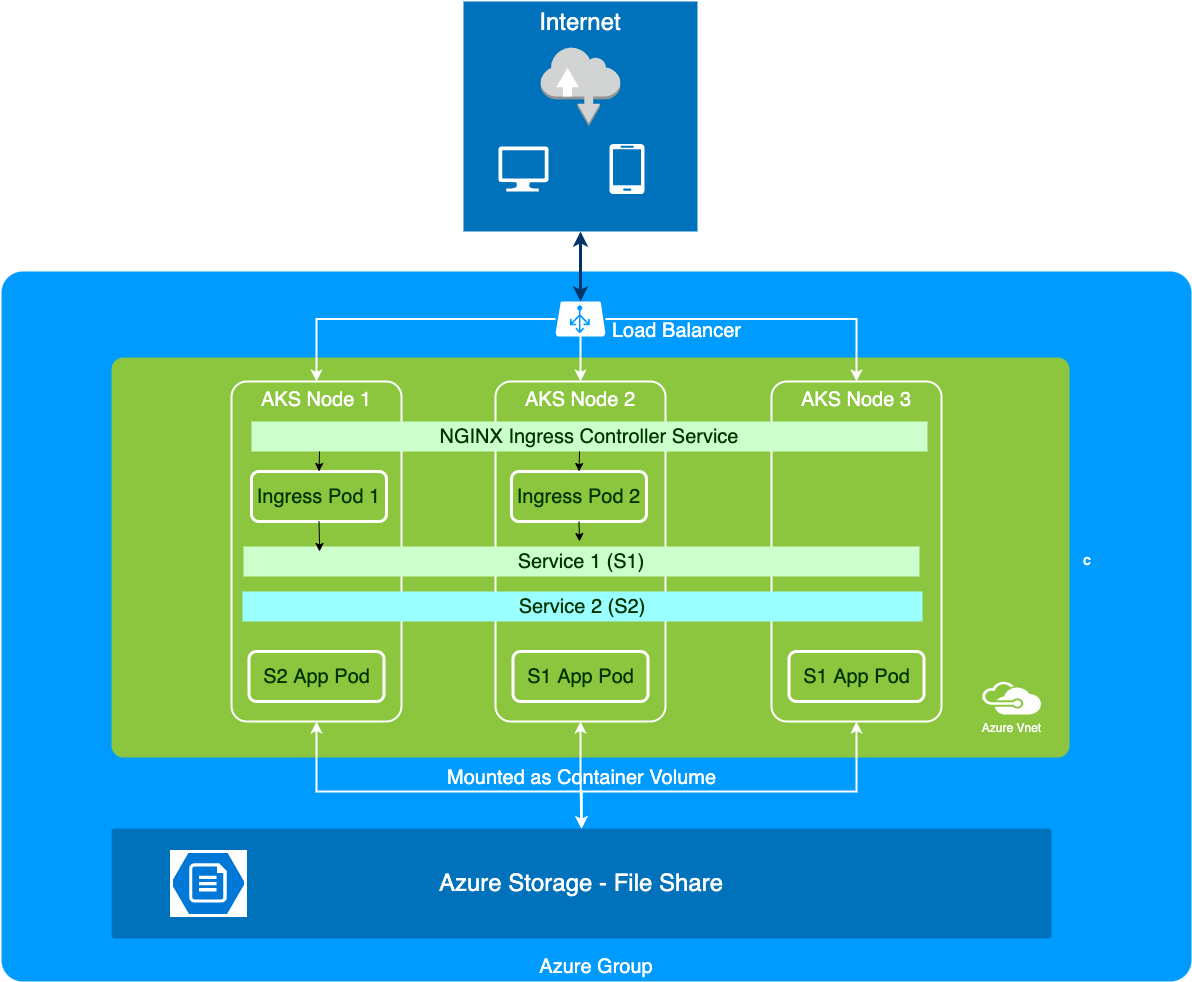

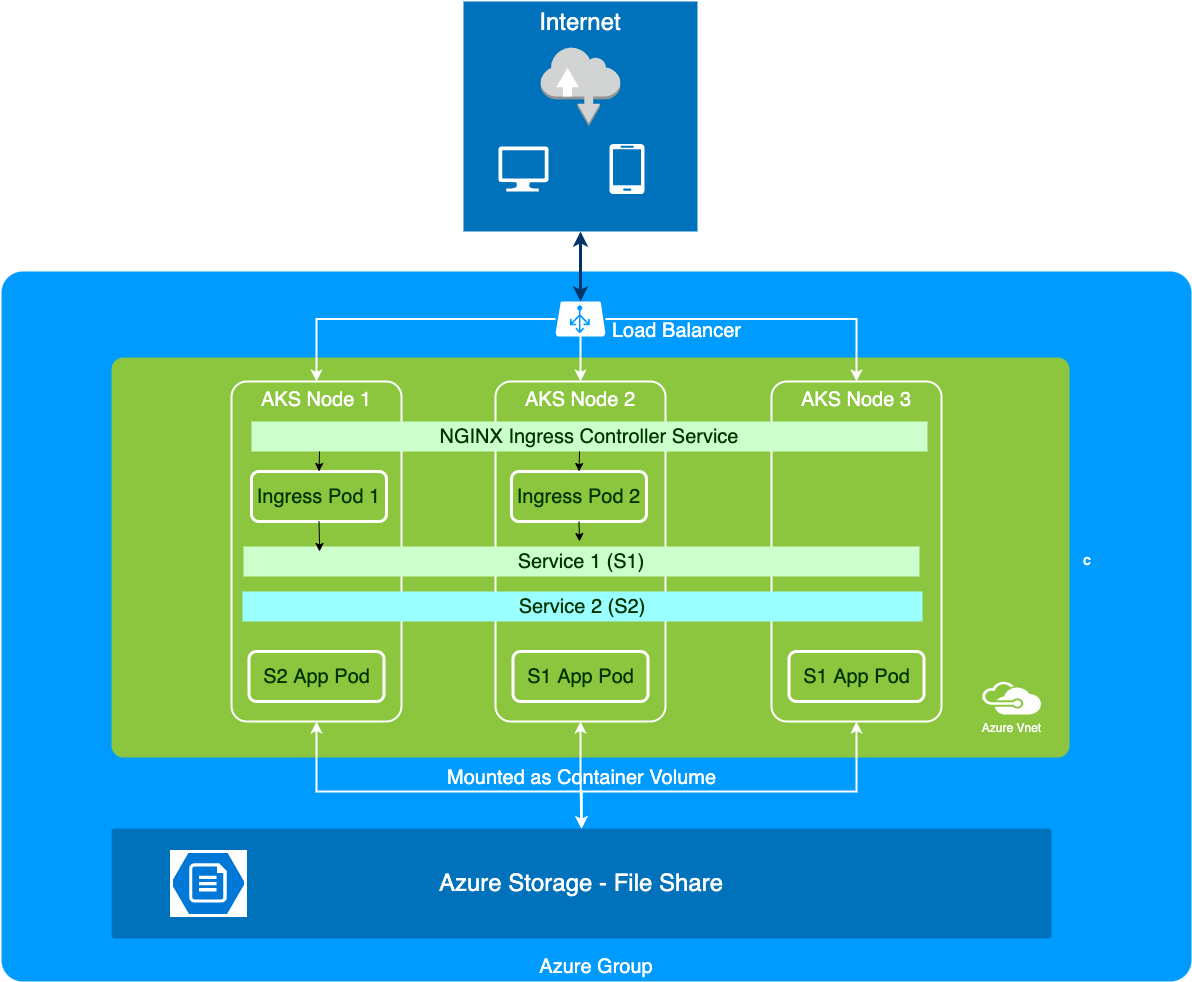

The system architecture of the framework is given below.

Model scalability, fault tolerance, and availability were achieved using the capabilities of Kubernetes. For scalability, we used two types of autoscalers:

- Horizontal Pod Autoscaler: scales the number of pod replicas based on pod resource utilization (CPU, memory).

- Cluster Autoscaler: scales the number of nodes in the cluster based on resource demand, adding nodes when pods cannot be scheduled for lack of resources and removing underutilized nodes.

Both autoscalers are configured with minimum and maximum limits.

The model service instances shared common storage for datasets, so dynamic file updates were visible to all peer instances.
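The Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default) and the result bounded by the configured minimum and maximum. The sketch below is a simplified illustration of that rule, not the controller's actual implementation: it ignores stabilization windows, pod readiness, and multiple metrics. The function name and parameters are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_utilization: float,
    target_utilization: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,
) -> int:
    """Simplified sketch of the HPA scaling rule for a single metric.

    Utilizations are average percentages of the pods' resource requests.
    """
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured minimum and maximum limits.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> ceil(4 * 1.5) = 6 pods.
print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))  # 6
```

The minimum and maximum limits matter at both ends: they keep a floor of replicas for availability and cap the cost of a load spike, which is where the Cluster Autoscaler takes over if the new pods do not fit on existing nodes.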

Once all the existing models were ported and deployed with our framework, the next challenge was adding new models to the cluster. The framework lets model developers deploy a new model in a few steps using an included script that supports configuring basic Kubernetes parameters. When the script runs successfully, it updates the Kubernetes configuration files with the new model. Once the developer pushes the changes to the code repository (GitHub in our case), the repository's CI/CD pipeline automatically deploys the new model into the cluster. The framework can also be extended for custom requirements; for example, it was extended with automated log-processing pipelines for the cluster that generate new datasets for another model in the system.
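The post does not include the deployment script. A minimal, hypothetical sketch of such a generator follows: it builds a Deployment, a Service, and an `autoscaling/v2` HorizontalPodAutoscaler for a new model, with the basic parameters exposed as arguments. The function name `build_manifests`, the default values, the `/data` mount path, and the `shared-datasets` PersistentVolumeClaim (assumed to exist with the `ReadWriteMany` access mode so every replica sees the same dataset files) are all assumptions.

```python
import json
from typing import Any, Dict, List


def build_manifests(
    model_name: str,
    image: str,
    port: int = 8000,
    cpu_request: str = "500m",
    memory_request: str = "512Mi",
    min_replicas: int = 1,
    max_replicas: int = 5,
    cpu_target_percent: int = 70,
    dataset_claim: str = "shared-datasets",  # hypothetical RWX claim name
) -> List[Dict[str, Any]]:
    """Hypothetical generator for a new model's Kubernetes manifests."""
    labels = {"app": model_name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": model_name, "labels": labels},
        # spec.replicas is omitted so the HPA owns the replica count.
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": model_name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Requests are what HPA utilization is measured against.
                        "resources": {"requests": {"cpu": cpu_request, "memory": memory_request}},
                        "volumeMounts": [{"name": "datasets", "mountPath": "/data"}],
                    }],
                    # Shared dataset storage mounted into every replica.
                    "volumes": [{
                        "name": "datasets",
                        "persistentVolumeClaim": {"claimName": dataset_claim},
                    }],
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": model_name},
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": port}]},
    }
    hpa = {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": model_name},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": model_name},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": cpu_target_percent},
                },
            }],
        },
    }
    return [deployment, service, hpa]


if __name__ == "__main__":
    # Emit a v1 List, which `kubectl apply -f` accepts in JSON form.
    items = build_manifests("example-model", "registry.example.com/example-model:0.1.0")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Omitting `spec.replicas` from the Deployment means re-applying the file does not reset the replica count chosen by the autoscaler. Committing the generated file to the repository would then let a CI/CD workflow apply it to the cluster.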

This blog post summarizes the overall framework Xeptagon developed for an intergovernmental organization to run multiple machine learning models at scale.